Evaluating deployments in spaces

Configure watsonx.governance evaluations in your deployment spaces to gain insights about your model performance. When you configure evaluations, you can analyze evaluation results and model transaction records directly in your spaces.

watsonx.governance evaluates your model deployments to help you measure performance and understand your model predictions. When you configure model evaluations, watsonx.governance generates metrics for each evaluation that you can review for insights into your model. watsonx.governance also logs the transactions that are processed during evaluations to help you understand how your model predictions are determined. For more information, see Evaluating AI models with Watson OpenScale.

If you have an instance of watsonx.governance provisioned, you can seamlessly create an online deployment, then monitor the deployment results for fairness, quality, drift, and explainability.

A typical scenario follows this sequence:

- Create a deployment space and associate a watsonx.governance instance with the space to enable all the monitoring capabilities. You can choose the type of space, for example, production or pre-production, depending on your requirements.

- Promote a trained machine learning model and input (payload) data to the deployment space and create an online deployment for the model.

- From the deployment Test tab, provide the input data and get predictions back.

- From the Evaluations tab, configure the evaluation to monitor your deployment for quality, fairness, and explainability. Provide all the required model details so that Watson OpenScale can connect to the model, the training and payload data, and to a repository for storing evaluation results.

- Configure a monitor for fairness to make sure that your model is producing unbiased results. Select fields to monitor for fairness, then set thresholds to measure predictions for a monitored group compared to a reference group. For example, you can evaluate your model to make sure that it is providing unbiased predictions based on gender.

- Configure a monitor for quality to determine model performance from the number of correct outcomes that the model produces on labeled test data, which is called feedback data. Set quality thresholds to track when a metric value is outside an acceptable range.

- Configure a monitor for drift to make sure that your deployments are up-to-date and consistent. Use feature importance to determine the impact of feature drift on your model. For example, a small amount of drift in an important feature can have a bigger impact on your model than a moderate amount of drift in a less important feature.

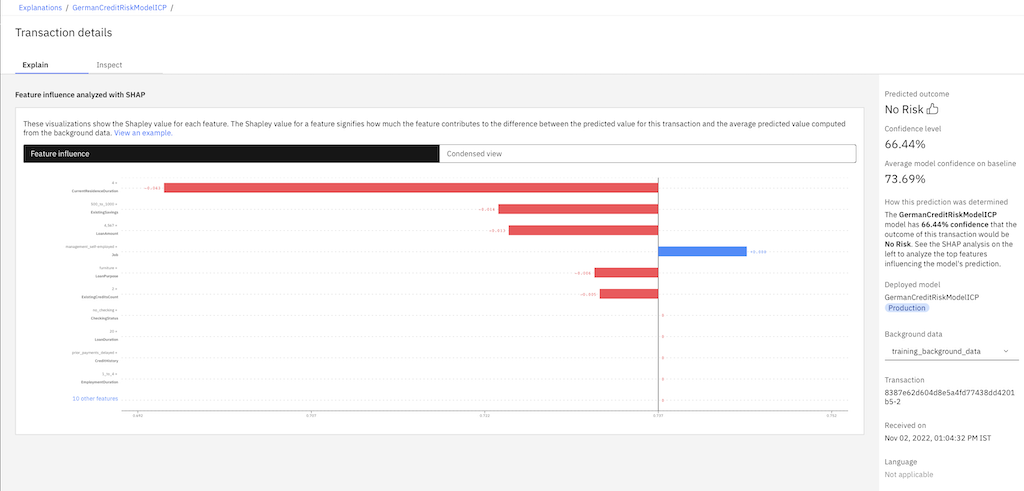

- Monitor your deployment results for explainability to understand the factors that led the model to determine a prediction. Choose the explanation method that is best suited to your needs. For example, you can choose the SHAP (SHapley Additive exPlanations) method for thorough explanations or the LIME (Local Interpretable Model-Agnostic Explanations) method for faster explanations.

- Finally, you can inspect your model evaluations to find areas where small changes to a few inputs would result in a different decision. Test scenarios to determine whether changing inputs can improve model performance.
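The last step in the scenario, finding small input changes that would result in a different decision, can be sketched in plain Python. This is an illustration only and not how watsonx.governance computes its results. The `decide` function, the feature names, and the candidate values are all hypothetical.

```python
def decide(applicant):
    """Hypothetical decision rule standing in for a deployed model."""
    score = (0.4 * applicant["income"]
             - 0.6 * applicant["debt"]
             + 5 * applicant["years_employed"])
    return "approved" if score >= 30 else "denied"


def closest_flips(decide_fn, instance, candidates):
    """For each feature, return the candidate value nearest to the current
    value that changes the decision when only that feature is changed."""
    original = decide_fn(instance)
    flips = {}
    for feature, values in candidates.items():
        # Try candidate values in order of distance from the current value.
        for value in sorted(values, key=lambda v: abs(v - instance[feature])):
            changed = dict(instance)
            changed[feature] = value
            if decide_fn(changed) != original:
                flips[feature] = value
                break
    return flips


# Hypothetical transaction and the alternative values to test per feature.
instance = {"income": 50, "debt": 20, "years_employed": 3}
candidates = {
    "income": [60, 70, 80],
    "debt": [10, 5, 0],
    "years_employed": [4, 5],
}

flips = closest_flips(decide, instance, candidates)
print(decide(instance), flips)
```

Each entry in `flips` names one feature and the nearest tested value that would change the outcome on its own, which is the kind of scenario you can then test against your deployment.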

The following sections describe how to configure watsonx.governance evaluations and review model insights in your deployment spaces:

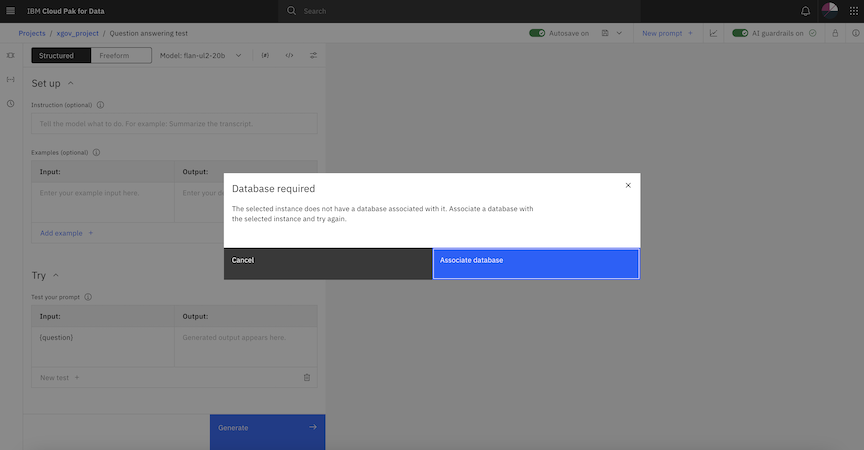

Preparing to evaluate models in spaces

If no database is associated with your watsonx.governance instance, you must associate one before you can run evaluations. To associate a database, click Associate database in the Database required dialog box and connect to a database. You must be assigned the Admin role for your project and watsonx.governance instance to associate databases.

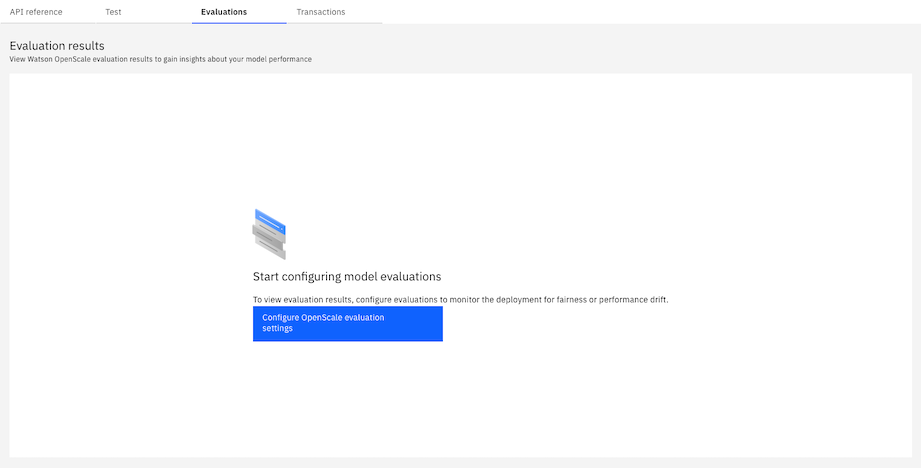

Configuring Watson OpenScale evaluations in spaces

After you associate your Watson OpenScale instance, you can select deployments to view the Evaluations and Transactions tabs that you can use to configure evaluations and review model insights. To start configuring model evaluations in your space, you can select Configure OpenScale evaluation settings to open a wizard that provides a guided series of steps.

In your deployment space, you can evaluate online deployments only.

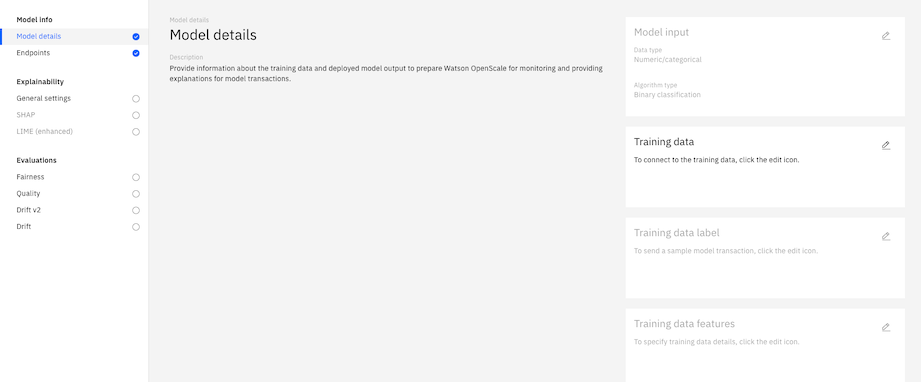

Providing model details

To configure model evaluations, you must provide model details to enable watsonx.governance to understand how your model is set up. You must provide details about your training data and your model output.

For more information, see Providing model details.

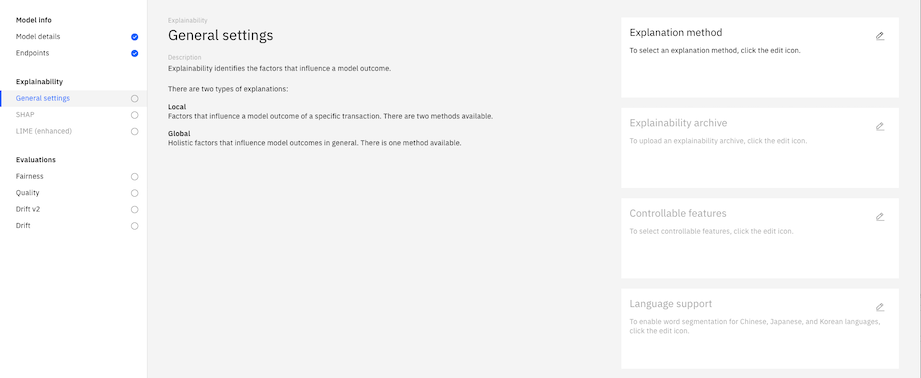

Configuring explainability

You can configure explainability in watsonx.governance to reveal which features contribute to the outcome predicted by your model for a transaction and predict what changes would result in a different outcome. You can choose to configure local explanations to analyze the impact of factors for specific model transactions and configure global explanations to analyze general factors that impact model outcomes.

For more information, see Configuring explainability.
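To illustrate what a local explanation reports, the following sketch computes exact Shapley values for a single transaction of a small model. It is a from-scratch illustration, not the SHAP library and not the watsonx.governance implementation. The `predict` function, feature names, and baseline values are hypothetical, and features that are "absent" from a coalition are assumed to take their baseline value.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict_fn, instance, baseline):
    """Exact Shapley values for one transaction.

    Enumerates every coalition of features, so it is practical only for
    a handful of features.
    """
    names = list(instance)
    n = len(names)

    def value(present):
        # Features outside the coalition fall back to the baseline value.
        x = {f: (instance[f] if f in present else baseline[f]) for f in names}
        return predict_fn(x)

    contributions = {}
    for feature in names:
        others = [f for f in names if f != feature]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                present = set(subset)
                total += weight * (value(present | {feature}) - value(present))
        contributions[feature] = total
    return contributions


def predict(x):
    """Hypothetical scoring function standing in for a deployed model."""
    return 0.5 * x["income"] + 2.0 * x["tenure"] - 1.0 * x["debt"]


instance = {"income": 10.0, "tenure": 3.0, "debt": 4.0}  # transaction to explain
baseline = {"income": 6.0, "tenure": 1.0, "debt": 2.0}   # assumed reference values

contributions = shapley_values(predict, instance, baseline)
print(contributions)
```

The contributions sum to the difference between the prediction for the transaction and the prediction for the baseline, which is why each value can be read as that feature's share of the outcome.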

Configuring fairness evaluations

You can configure fairness evaluations to determine whether your model produces biased outcomes for different groups. To configure fairness evaluations, you can specify the reference group that you expect to represent favorable outcomes and the fairness metrics that you want to use. You can also select features that are compared to the reference group to evaluate them for bias.

For more information, see Configuring fairness evaluations.
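As a rough illustration of comparing a monitored group to a reference group, the following sketch computes a disparate impact ratio, which is one common fairness metric, and checks it against a threshold. The records, group names, and the 0.8 threshold are hypothetical, and the metrics that you select in the configuration might be calculated differently.

```python
def favorable_rate(records, group, favorable):
    """Share of a group's outcomes that are the favorable outcome."""
    outcomes = [outcome for g, outcome in records if g == group]
    if not outcomes:
        raise ValueError(f"no records for group {group!r}")
    return sum(1 for o in outcomes if o == favorable) / len(outcomes)


def disparate_impact(records, monitored, reference, favorable):
    """Favorable rate of the monitored group divided by that of the reference group."""
    reference_rate = favorable_rate(records, reference, favorable)
    if reference_rate == 0:
        raise ValueError("reference group has no favorable outcomes")
    return favorable_rate(records, monitored, favorable) / reference_rate


# Hypothetical (group, predicted outcome) pairs from model transactions.
records = (
    [("male", "approved")] * 8 + [("male", "denied")] * 2
    + [("female", "approved")] * 6 + [("female", "denied")] * 4
)

ratio = disparate_impact(records, monitored="female", reference="male",
                         favorable="approved")
threshold = 0.8  # assumed lower limit for an acceptable ratio
is_fair = ratio >= threshold
print(ratio, is_fair)
```

A ratio close to 1 means that both groups receive the favorable outcome at a similar rate. In this made-up data, the monitored group falls below the assumed threshold.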

Configuring quality evaluations

You can configure quality evaluations to understand how well your model predicts accurate outcomes. To configure quality evaluations, you must specify thresholds for each metric to enable watsonx.governance to identify when model quality declines.

For more information, see Configuring quality evaluations.
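The idea of a quality threshold can be sketched as follows: compute a metric from labeled feedback data and flag it when it drops below a lower limit. Accuracy is used here as a simple example metric. The feedback rows and the 0.85 threshold are hypothetical.

```python
def accuracy(feedback):
    """Fraction of feedback rows where the prediction matches the label."""
    if not feedback:
        raise ValueError("feedback data is empty")
    correct = sum(1 for label, prediction in feedback if label == prediction)
    return correct / len(feedback)


# Hypothetical feedback data as (actual label, model prediction) pairs.
feedback = [
    ("yes", "yes"),
    ("no", "no"),
    ("yes", "no"),
    ("no", "no"),
    ("yes", "yes"),
]

lower_threshold = 0.85  # assumed minimum acceptable accuracy
score = accuracy(feedback)
threshold_violated = score < lower_threshold
print(score, threshold_violated)
```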

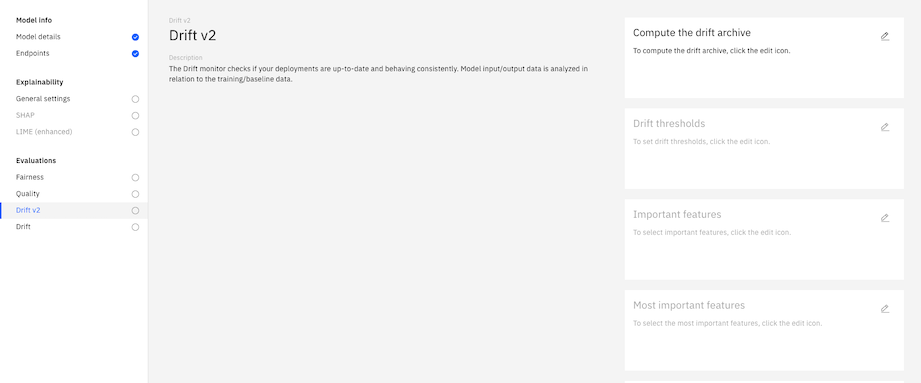

Configuring drift v2 evaluations

You can configure drift v2 evaluations to measure changes in your data over time to make sure that you get consistent outcomes for your model. To configure drift v2 evaluations, you must set thresholds that enable watsonx.governance to identify changes in your model output, the accuracy of your predictions, and the distribution of your input data. You must also select important features to enable watsonx.governance to measure the change in value distribution.

For more information, see Configuring drift v2 evaluations.
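To make the idea of a change in value distribution concrete, the following sketch compares baseline and production values for each feature and weights the result by feature importance. Total variation distance is used purely as an illustrative measure and is not necessarily the metric that watsonx.governance calculates. The feature names, data, and importance scores are hypothetical.

```python
from collections import Counter


def distribution(values):
    """Relative frequency of each distinct value."""
    counts = Counter(values)
    total = len(values)
    return {value: count / total for value, count in counts.items()}


def total_variation(baseline_values, production_values):
    """Total variation distance between two categorical distributions (0 to 1)."""
    p = distribution(baseline_values)
    q = distribution(production_values)
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


# Hypothetical training (baseline) and recent payload (production) data.
baseline = {
    "plan": ["basic"] * 5 + ["premium"] * 5,
    "region": ["north"] * 8 + ["south"] * 2,
}
production = {
    "plan": ["basic"] * 8 + ["premium"] * 2,
    "region": ["north"] * 7 + ["south"] * 3,
}
importance = {"plan": 0.2, "region": 0.7}  # assumed feature importance scores

drift = {f: total_variation(baseline[f], production[f]) for f in baseline}
weighted = {f: drift[f] * importance[f] for f in drift}
ranked = sorted(weighted, key=weighted.get, reverse=True)
print(drift, ranked)
```

In this made-up data, `region` drifts less than `plan` but ranks first after weighting, because a small shift in an important feature can matter more than a larger shift in a less important one.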

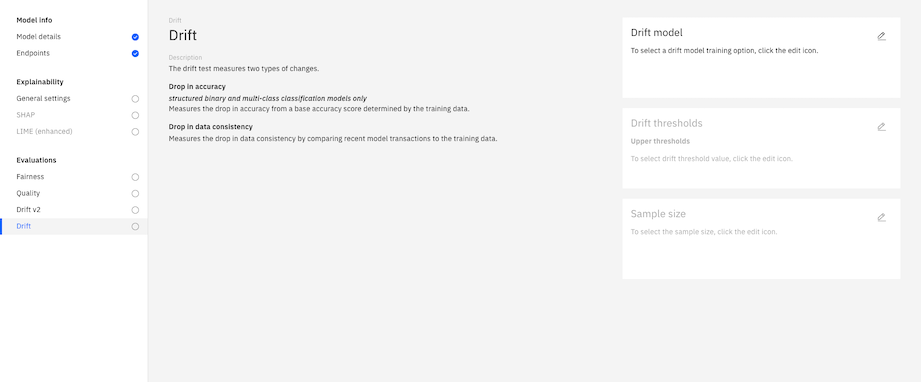

Configuring drift evaluations

You can configure drift evaluations to enable watsonx.governance to detect drops in accuracy and data consistency in your model. To configure drift evaluations, you must set thresholds to enable watsonx.governance to establish an accuracy and consistency baseline for your model.

For more information, see Configuring drift evaluations.

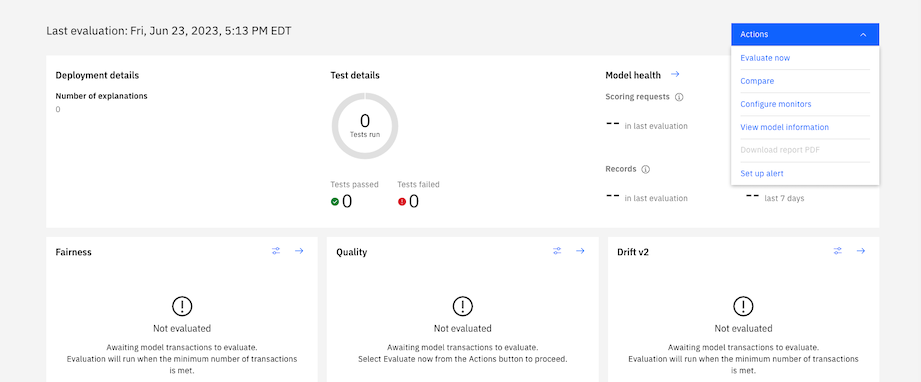

Running evaluations

After you configure evaluations, close the wizard. To run the evaluations, select Evaluate now in the Actions menu on the Evaluations tab and send model transactions.
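Model transactions are sent as structured request data. As a minimal sketch, assuming that your deployment accepts the `fields` and `values` JSON layout that Watson Machine Learning online scoring requests use, you might assemble the data like this. The field names and rows are hypothetical, and the helper function is not part of any product API.

```python
import json


def build_scoring_payload(fields, rows):
    """Assemble request data in the assumed fields/values layout."""
    for row in rows:
        if len(row) != len(fields):
            raise ValueError("each row must have one value per field")
    return {
        "input_data": [
            {"fields": list(fields), "values": [list(row) for row in rows]}
        ]
    }


# Hypothetical feature columns and two transactions to score.
fields = ["age", "income", "debt"]
rows = [[34, 52000, 8000], [51, 78000, 1500]]

payload = build_scoring_payload(fields, rows)
print(json.dumps(payload, indent=2))
```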

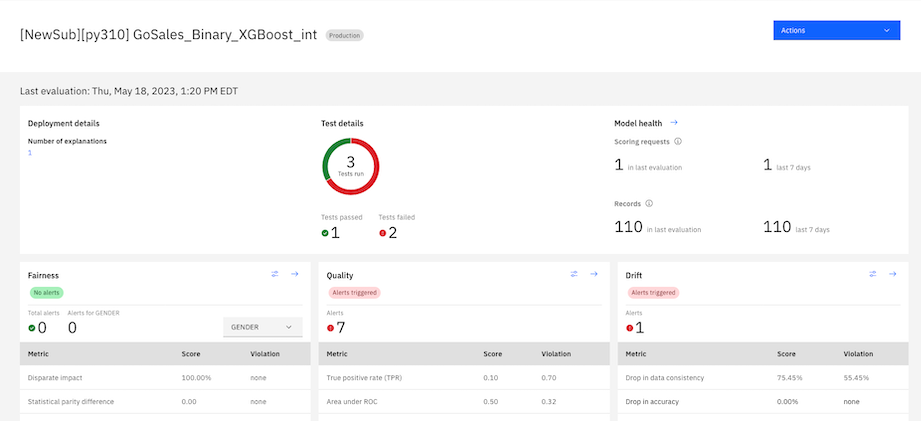

Reviewing evaluation results

You can analyze evaluation results on the Evaluations tab to gain insights about your model performance. To analyze evaluation results, you can click the arrow in an evaluation section or use the Actions menu to view details about your model.

For more information, see Reviewing evaluation results.

Reviewing model transactions

You can analyze model transactions on the Transactions tab to understand how your model predicts outcomes and predict what changes might cause different outcomes. To analyze transactions, you can choose to view explanations that provide details about how your model predictions are determined.

For more information, see Explaining model transactions.
